The guide to data transformation

8min • Last updated on Aug 20, 2025

Olivier Renard

Content & SEO Manager

A recent Splunk study reveals that 55% of the data businesses collect is dark data: information that is stored but never used.

Without an appropriate process, this data remains unexploited, limiting business performance.

Key Takeaways:

Data transformation makes information usable. It involves cleaning, structuring, and converting data to extract value.

Different techniques exist depending on needs. Cleaning, merging, enriching, segmenting, anonymising: each method serves a specific purpose.

The right tools facilitate transformation. ETL, ELT, programming languages, and data warehouses help automate these processes.

A key factor for business performance. Consistent, high-quality data improves decision-making, scalability, and governance.

🔍 What is data transformation, and why is it essential? Discover the challenges, methods, and tools to transform your data and turn it into a performance driver for your business. 🚀

What is data transformation?

Data transformation refers to processes aimed at modifying and adapting raw data to make it usable.

It involves cleaning, enriching, or converting information from one or multiple sources to prepare it for storage or analysis.

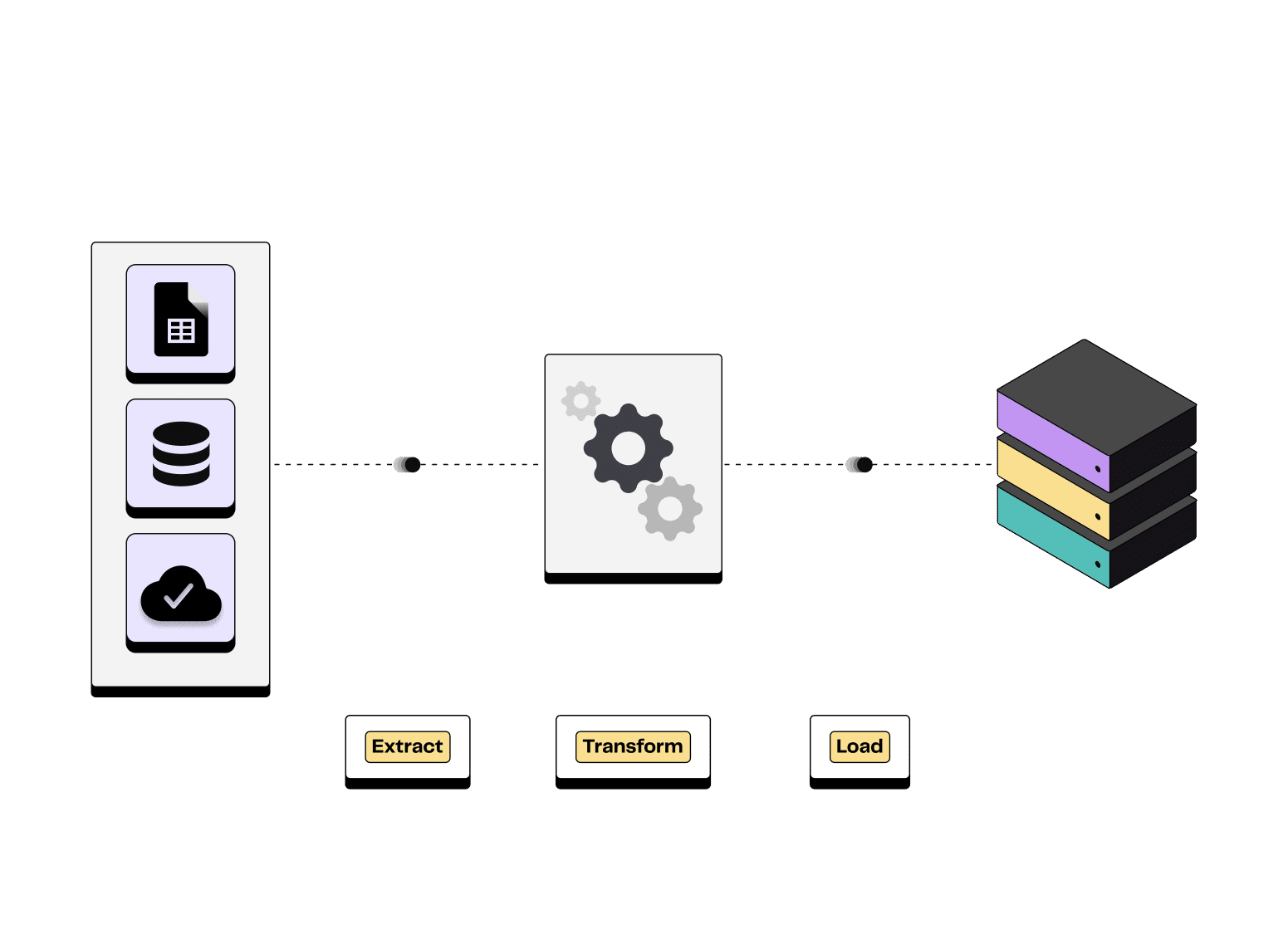

It is the "T" in the ETL and ELT ingestion processes, which we will discuss later. In ETL (Extract, Transform, Load), transformation happens before loading; in ELT (Extract, Load, Transform), it happens after.

What is it for?

As an essential component of a Modern Data Stack (MDS), data transformation improves the quality and usability of information. It prevents teams from working with incomplete, heterogeneous or inconsistent data.

It helps businesses:

Ensure reliable analysis by eliminating errors and duplicates.

Save time by providing business teams with coherent and structured data.

Optimise performance by fully leveraging data assets.

💡 Example: An e-commerce company wants to personalise its marketing campaigns based on customer purchasing behaviour. To do so, it needs to analyse order histories and identify relevant segments (new customers, repeat buyers, inactive clients).

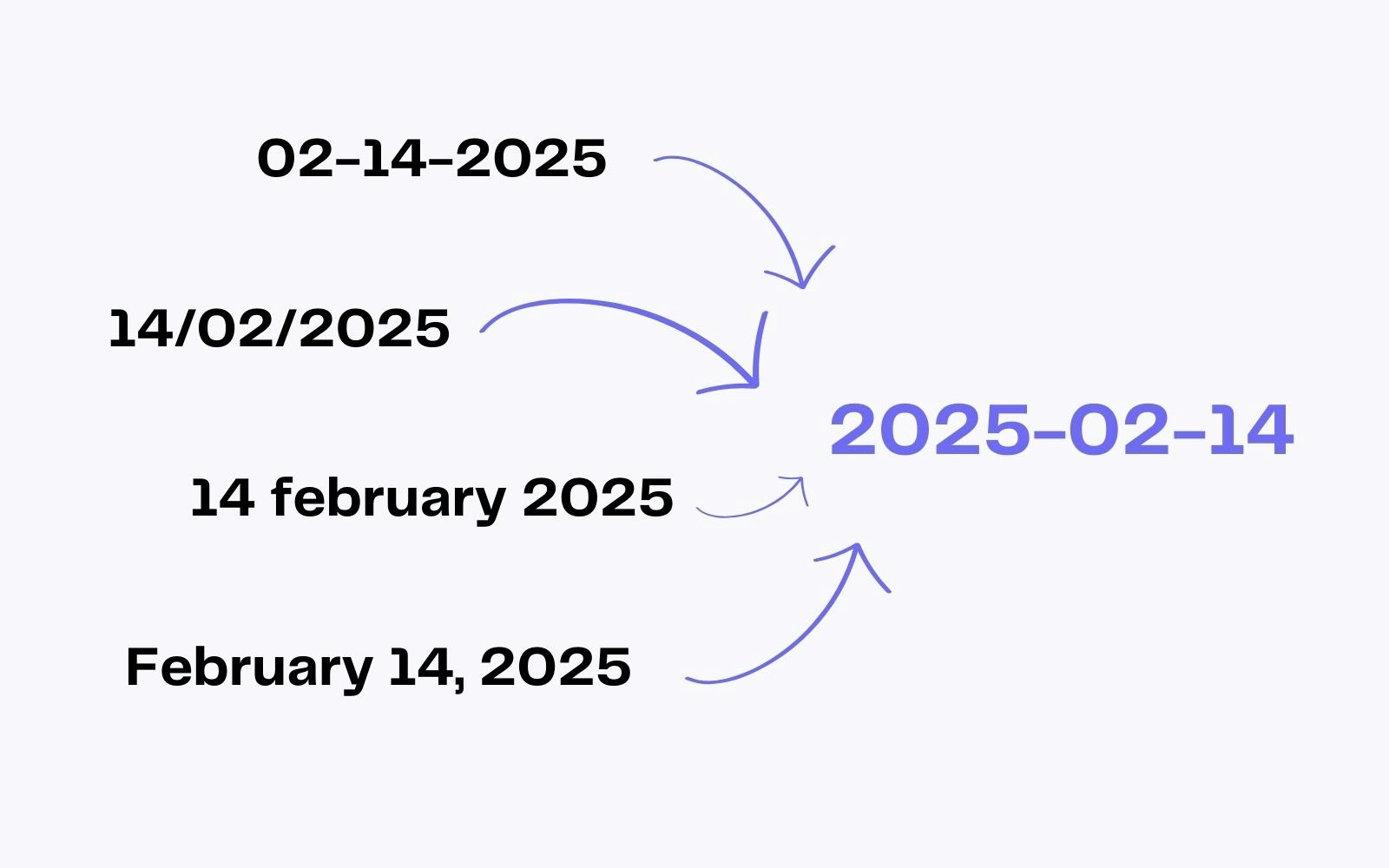

Before using this data, it must be transformed. For instance, purchase dates stored in different formats must be standardised to calculate the average time between two orders.

Transformation of the date into a standard format
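The date standardisation described above could be sketched as follows. This is a minimal illustration, not a production parser: the list of formats in `KNOWN_FORMATS`, the function names, and the sample orders are all assumptions made for the example.

```python
from datetime import date, datetime
from statistics import mean

# Hypothetical formats found in the source systems (an assumption).
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def parse_purchase_date(raw: str) -> date:
    """Try each known format in turn and return a date object."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {raw!r}")


def average_days_between_orders(raw_dates: list[str]) -> float:
    """Standardise the dates, sort them, and average the gaps in days."""
    dates = sorted(parse_purchase_date(d) for d in raw_dates)
    if len(dates) < 2:
        raise ValueError("Need at least two orders to compute a gap")
    gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
    return mean(gaps)


# Sample order dates in three different source formats (made up).
orders = ["2025-01-05", "19/01/2025", "02.02.2025"]
standardised = [parse_purchase_date(d).isoformat() for d in orders]
print(standardised)
print(average_days_between_orders(orders))
```

Ambiguous inputs such as 03/04/2025 (day first or month first) cannot be resolved by trial and error; in practice the expected format usually has to be fixed per source.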

Transformation, ingestion, orchestration, observability: what are the differences?

It’s essential to differentiate data transformation from other components of the MDS.

Data Ingestion: the process of collecting and importing data from multiple sources into a centralised system.

Orchestration: the automation of data transformation workflows and of data movement between different tools.

Observability: the real-time monitoring of data quality, availability, and reliability to detect errors, inconsistencies, or integration issues.

Main steps

Data transformation follows a series of technical steps to ensure data is clean, consistent, and usable.

1️⃣ Discovery: identifying, collecting, and analysing source data to understand structure and formats.

2️⃣ Cleaning: detecting missing values and inconsistencies. Removing errors and duplicates to ensure data reliability.

3️⃣ Mapping: aligning source data fields with those in the target system (e.g., mapping "Purchase Date" in a CSV file to "purchase_date" in an SQL database).

4️⃣ Code Generation: defining transformation rules using SQL, Python, R, or ETL/ELT pipeline tools.

5️⃣ Execution: running the process to modify and load data according to the defined rules.

6️⃣ Validation & control: checking data quality and ensuring transformations were applied correctly.
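To make the mapping and validation steps more concrete, here is a small sketch in plain Python. The field names in `FIELD_MAPPING` (beyond the "Purchase Date" example above), the helper functions, and the sample rows are hypothetical; real pipelines would typically express the same rules in SQL or in an ETL/ELT tool.

```python
# Hypothetical mapping from source CSV headers to target column names.
FIELD_MAPPING = {
    "Purchase Date": "purchase_date",
    "Customer ID": "customer_id",
    "Amount": "amount",
}


def map_fields(row: dict[str, str]) -> dict[str, str]:
    """Mapping step: rename source fields to their target names."""
    return {target: row[source] for source, target in FIELD_MAPPING.items()}


def validate(rows: list[dict[str, str]]) -> list[str]:
    """Validation step: return a list of human-readable problems."""
    problems = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if value.strip() == "":
                problems.append(f"row {index}: {column} is empty")
    return problems


# Made-up source rows; the second one is missing its customer ID.
source_rows = [
    {"Purchase Date": "2025-01-05", "Customer ID": "C1", "Amount": "40.00"},
    {"Purchase Date": "2025-01-19", "Customer ID": "", "Amount": "25.50"},
]
mapped_rows = [map_fields(row) for row in source_rows]
issues = validate(mapped_rows)
print(issues)
```

Returning a list of problems rather than failing on the first one lets the validation step report everything that needs fixing in a single run.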

Types of data transformation

Data transformation takes various forms depending on business needs. Some methods ensure data consistency, while others standardise or enrich information for better usability.

Here are three common types:

Standardisation

This process ensures uniform formats and structures for compatibility across systems.

💡 Example: Converting all dates to the YYYY-MM-DD format or normalising country names (France instead of FR or FRA).
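As an illustration of the country-name case, standardisation can be as simple as a lookup table. The sketch below assumes a tiny, hypothetical `COUNTRY_NAMES` table; a real one would cover every code and spelling present in the data.

```python
# Hypothetical lookup table mapping known variants to one canonical name.
COUNTRY_NAMES = {
    "FR": "France", "FRA": "France", "FRANCE": "France",
    "DE": "Germany", "DEU": "Germany", "GERMANY": "Germany",
}


def standardise_country(raw: str) -> str:
    """Return the canonical country name, or fail loudly if unknown."""
    key = raw.strip().upper()
    if key not in COUNTRY_NAMES:
        raise ValueError(f"Unknown country value: {raw!r}")
    return COUNTRY_NAMES[key]


raw_values = ["FR", " fra ", "France"]
countries = [standardise_country(value) for value in raw_values]
print(countries)
```

Raising on unknown values is a design choice for this sketch: it surfaces new variants instead of silently passing them through.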

Data aggregation

Aggregation involves grouping and summarising data to make it more readable and usable.

💡 Example: Calculating average revenue per customer from e-commerce transactions.
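A minimal sketch of this aggregation, assuming a hypothetical list of transactions with `customer_id` and `amount` fields: revenue is first summed per customer, then averaged across customers.

```python
from collections import defaultdict

# Made-up transactions for illustration.
transactions = [
    {"customer_id": "C1", "amount": 40.0},
    {"customer_id": "C2", "amount": 25.0},
    {"customer_id": "C1", "amount": 35.0},
]

# Group by customer and sum the amounts.
revenue_by_customer: dict[str, float] = defaultdict(float)
for transaction in transactions:
    revenue_by_customer[transaction["customer_id"]] += transaction["amount"]

# Summarise: total revenue divided by the number of distinct customers.
average_revenue_per_customer = (
    sum(revenue_by_customer.values()) / len(revenue_by_customer)
)
print(dict(revenue_by_customer), average_revenue_per_customer)
```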

Data enrichment

This transformation adds new information from another source to refine the analysis and improve decision-making.

💡 Example: Associating a customer list with an engagement score based on purchase history and interactions.
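Enrichment of this kind is essentially a join between two sources. In the sketch below, the customer list and the `engagement_scores` lookup are invented for illustration, and how the score itself is computed is left out because the article does not define it.

```python
# Primary source: the customer list (made-up data).
customers = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C2", "email": "b@example.com"},
]

# Second source: hypothetical engagement scores keyed by customer ID.
engagement_scores = {"C1": 82}

# Left join: keep every customer, attach a score when one exists.
enriched = [
    {**customer, "engagement_score": engagement_scores.get(customer["customer_id"])}
    for customer in customers
]
print(enriched)
```

Customers without a score keep a `None` value rather than being dropped, so the enriched list has the same number of rows as the original.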

These three transformations are among the most common, but there are many others.

| Transformation type | Definition |
|---|---|
| Data cleaning | Removing errors, duplicates, and outliers to ensure quality. |
| Mapping | Aligning fields from different sources to ensure consistency. |
| Normalisation | Scaling values for comparability or analysis. |
| Joining | Merging multiple tables or data sources based on common keys. |
| Splitting | Separating one column into multiple fields to refine data organisation. |
| Encoding | Converting data from one format to another, to ensure compatibility with different systems or to optimise processing. |
| Discretisation | Converting continuous numerical values into categories or intervals to facilitate analysis and modelling. |
| Encryption | Securing sensitive data using cryptographic techniques. |

Types of data transformation
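Three of the transformations in the table (normalisation, discretisation, and splitting) are simple enough to sketch in a few lines. The function names, the age bands, and the sample values below are assumptions chosen for illustration; min-max scaling is only one of several ways to normalise values.

```python
def min_max_scale(values: list[float]) -> list[float]:
    """Normalisation: rescale values to the 0-1 range."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        return [0.0 for _ in values]  # avoid dividing by zero
    return [(value - lowest) / (highest - lowest) for value in values]


def discretise_age(age: int) -> str:
    """Discretisation: turn a continuous value into a category.

    The bands are hypothetical.
    """
    if age < 25:
        return "under 25"
    if age < 45:
        return "25-44"
    return "45+"


def split_full_name(full_name: str) -> tuple[str, str]:
    """Splitting: separate one column into two at the first space."""
    first, _, last = full_name.strip().partition(" ")
    return first, last


print(min_max_scale([10, 20, 30]))
print(discretise_age(30))
print(split_full_name("Ada Lovelace"))
```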

Benefits of data transformation

Data transformation offers many benefits to businesses. Here are the main ones:

👉 Improving data quality and reliability

Data transformation eliminates inconsistencies, duplicates and errors in the raw data.

By standardising and cleansing the data, you obtain a more reliable and higher quality information base. This improvement translates into accurate analyses and more informed decision-making.

👉 Optimising analytics and AI performance

Artificial intelligence models and analysis tools need well-structured data to function effectively.

Data transformation creates more coherent datasets that are better suited to machine learning algorithms. This improves the accuracy of predictive models and the relevance of insights.

👉 Enabling integration between different tools and platforms

Companies use several systems (CRM, ERP, CDP, etc.). By harmonising formats, data transformation ensures compatibility between these different tools. This makes it easier to share and exploit data between departments.

👉 Ensuring compliance and governance

With regulations such as the GDPR and CCPA, businesses need to ensure that data is handled securely and in compliance. Transformation makes it possible to apply security, anonymisation and encryption rules that reduce the risks associated with managing sensitive information.

Tools and technologies

Data transformation tools are a core part of a modern data stack.

ETL and ELT: transforming before or after loading

The ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes are two distinct methods for extracting and transforming data. Although their acronyms are similar, they differ in the order of operations.

Each solution is based on data pipelines and is tailored to specific needs.

ETL: Data is extracted, transformed and then loaded into a target system (e.g. a data warehouse).

This is an ideal model for environments requiring strict data governance and prior transformation before storage.

💡 The main suppliers: Talend, IBM DataStage, Airbyte, Stitch

ELT: In this approach, data is first stored and then transformed directly in the target system.

The ELT process is adapted to modern cloud environments, with advanced computing capabilities. It offers greater flexibility and scalability for processing large volumes.

💡 The main solutions: Fivetran, Apache Hadoop, Google Dataform

Other transformation tools exist on the market: dbt (Data Build Tool), Trifacta, Rockset, Trino, etc.
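The difference in the order of operations between ETL and ELT can be illustrated with a toy example. In this sketch, an in-memory SQLite database stands in for the target system, which is an assumption made purely so the code is runnable; the table names and sample rows are also invented.

```python
import sqlite3

# Raw extract: amounts arrive as untidy strings (made-up data).
raw_orders = [("C1", " 40.00 "), ("C2", "25.50")]
QUERY = "SELECT customer_id, amount FROM orders ORDER BY customer_id"

# ETL: transform in application code first, then load the clean rows.
etl_db = sqlite3.connect(":memory:")
etl_db.execute("CREATE TABLE orders (customer_id TEXT, amount REAL)")
clean_orders = [(cid, float(amount.strip())) for cid, amount in raw_orders]
etl_db.executemany("INSERT INTO orders VALUES (?, ?)", clean_orders)
etl_result = etl_db.execute(QUERY).fetchall()

# ELT: load the raw rows as-is, then transform inside the target with SQL.
elt_db = sqlite3.connect(":memory:")
elt_db.execute("CREATE TABLE raw_orders (customer_id TEXT, amount TEXT)")
elt_db.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw_orders)
elt_db.execute(
    "CREATE TABLE orders AS "
    "SELECT customer_id, CAST(TRIM(amount) AS REAL) AS amount FROM raw_orders"
)
elt_result = elt_db.execute(QUERY).fetchall()

print(etl_result)
print(elt_result)
```

Both paths end with the same clean table. The practical difference is where the work runs and whether the raw data remains available in the target system for later reprocessing, as it does in the ELT path.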

Data warehouses & data lakes

Data warehouses and data lakes are two types of storage solutions that play an essential role in data transformation.

Data warehouse: Structured storage optimised for analysis. Data is transformed, either before loading or inside the warehouse with a tool like dbt, to ensure consistency and fast access.

💡 The main data warehouses: Snowflake, Google BigQuery, Amazon Redshift, etc.

Data lake: Raw, flexible storage that keeps untransformed data in its native format. Transformations are applied on demand via frameworks, depending on analysis needs.

💡 Main solutions: Amazon S3, Microsoft Azure Data Lake, Apache Hadoop, Oracle Intelligent Data Lake.

Challenges and best practices

Data transformation is a strategic lever for developing a company's business. It also presents a number of challenges. A rigorous approach can avoid costly mistakes and optimise processes.

Ensuring data quality

Incomplete, obsolete or siloed data is hard to use. To guarantee data reliability:

Automate cleansing (errors, duplicates, missing values).

Centralise data management to avoid silos.
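Automated cleansing can start small. The sketch below, with a hypothetical `cleanse` helper and made-up rows, removes duplicates on a key and sets aside rows with missing required values so they can be reviewed rather than silently lost.

```python
def cleanse(
    rows: list[dict], key: str, required: list[str]
) -> tuple[list[dict], list[dict]]:
    """Return (clean_rows, rejected_rows).

    Rows missing the key or any required field are rejected;
    later rows repeating an already-seen key are dropped as duplicates.
    """
    seen_keys = set()
    clean, rejected = [], []
    for row in rows:
        if any(row.get(field) in (None, "") for field in (key, *required)):
            rejected.append(row)
            continue
        if row[key] in seen_keys:
            continue  # duplicate of a row already kept
        seen_keys.add(row[key])
        clean.append(row)
    return clean, rejected


rows = [
    {"order_id": "O1", "email": "a@example.com"},
    {"order_id": "O1", "email": "a@example.com"},  # duplicate
    {"order_id": "O2", "email": ""},               # missing value
]
clean_rows, rejected_rows = cleanse(rows, key="order_id", required=["email"])
print(clean_rows, rejected_rows)
```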

Ensuring the scalability of pipelines

Data volumes are constantly increasing. An unsuitable infrastructure risks slowing down processing and generating high costs.

Opt for cloud architectures that allow resources to be adapted according to requirements.

Monitor performance and adjust processes to avoid bottlenecks.

Ensuring compliance with regulations

GDPR, CCPA... Companies must comply with strict rules to protect personal data.

Implement access and control rules (encryption, anonymisation).

Ensure traceability of transformations with detailed logs.

Work with the legal department to adapt to regulatory changes.

💡 Case in point: A fintech managing sensitive data implements a strict governance policy. Objective: avoid duplication of personal information and guarantee GDPR compliance by automating encryption of customer data.
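As a rough illustration of protecting personal fields during transformation, the sketch below pseudonymises an email address with a keyed hash (HMAC-SHA256) from the Python standard library. This is a swapped-in technique, not the encryption mentioned above: it is one-way, whereas encryption is reversible and would call for a vetted cryptography library and proper key management. The key value and function name are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for the demo; a real key would come from a secrets manager.
SECRET_KEY = b"demo-key-do-not-use"


def pseudonymise(value: str, secret_key: bytes) -> str:
    """Replace a personal value with a stable, keyed SHA-256 token."""
    normalised = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalised, hashlib.sha256).hexdigest()


token_a = pseudonymise("Alice@Example.com ", SECRET_KEY)
token_b = pseudonymise("alice@example.com", SECRET_KEY)
print(token_a == token_b)  # same person, same token
```

Because the same input always yields the same token, records can still be joined and deduplicated without exposing the raw value. Note that pseudonymised data is still treated as personal data under the GDPR, so this reduces risk without removing compliance obligations.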

Conclusion and trends

The volume of digital data is exploding: this year we will produce three times as much as in 2020 (Statista). Making the most of this ‘new black gold of the 21st century’ has become a major challenge for businesses.

Transformation is a central element of any modern data infrastructure. It helps to improve the quality of information, optimise analyses and ensure regulatory compliance. However, many challenges remain: data silos, infrastructure scalability, respect for confidentiality, etc.

In this context, composable CDPs offer a cost-effective, scalable and modular solution. They provide the flexibility to centralise, transform and activate data that already sits in the data warehouse. Combined with machine learning technologies, these platforms facilitate data activation.

Predictive AI reinforces this dynamic by enabling companies to anticipate behaviour and personalise their customer interactions.

👉 Looking to make the most of your data and improve your marketing performance and sales strategy? Find out how DinMo's composable CDP can help you unify and activate your data to extract maximum value.

FAQ

How does data transformation improve machine learning models?

Data transformation plays a crucial role in machine learning by ensuring that raw data is clean, structured, and ready for analysis. Before training a model, data must be standardised, normalised, and enriched to enhance data quality.

Handling missing values (by removing or imputing them), treating outliers, and converting categorical variables into numerical formats help algorithms make more accurate predictions.

Additionally, transformed data improves feature engineering, helping businesses extract meaningful insights and refine decision-making. Properly processed data enhances model accuracy, reduces bias, and increases efficiency. Whether in data analytics or predictive modeling, structured data pipelines ensure that models operate on reliable datasets, leading to better performance in real-world applications.
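Two of these preparation steps can be sketched in plain Python: filling missing values and converting a categorical variable into numbers. Median imputation and one-hot encoding are used here as common examples rather than as the only options, and the function names and sample values are invented.

```python
from statistics import median
from typing import Optional


def impute_missing(values: list[Optional[float]]) -> list[float]:
    """Replace missing values (None) with the median of the known ones."""
    known = [value for value in values if value is not None]
    fill = median(known)
    return [fill if value is None else value for value in values]


def one_hot(categories: list[str]) -> list[list[int]]:
    """Encode each category as a 0/1 vector, one column per sorted label."""
    labels = sorted(set(categories))
    return [[1 if c == label else 0 for label in labels] for c in categories]


basket_values = impute_missing([10.0, None, 30.0])
segments = one_hot(["new", "repeat", "new"])
print(basket_values)
print(segments)
```

In a real project these steps are usually handled by a data-preparation library, and the fill value and label set are learned on the training data only so that the same transformation can be reapplied to new data.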

How can businesses ensure data quality during the transformation process?

Maintaining high data quality during data transformation is essential for reliable decision-making. Businesses should start by identifying inconsistencies, missing values, and duplicate records before applying transformation rules.

Implementing automated data validation techniques ensures accuracy throughout the transformation process. ETL and ELT pipelines help structure raw data, while metadata management maintains consistency across systems. Continuous data governance frameworks enforce regulatory compliance and improve overall business efficiency.

By integrating data analytics and monitoring tools, companies can track transformation errors and apply corrective actions in real-time. High-quality, transformed data supports better insights, leading to more effective marketing strategies, customer segmentation, and operational efficiency.