The ultimate guide to data orchestration

7min • Last updated on Apr 17, 2025

Olivier Renard

Content & SEO Manager

A study by Sigma reveals a surprising statistic: 76% of professionals claim their company is data-driven, but 39% of business experts admit they don’t really know what that means.

Although data volumes have grown significantly over the past five years, many companies still struggle to build a modern data organisation capable of harnessing them effectively.

To make full use of this potential, data must be structured and seamlessly routed between systems. This is where data orchestration comes in.

Key takeaways:

Data orchestration automates the movement and transformation of information across the different systems of an organisation.

It involves centralising, cleaning and organising data in real time to make it usable and actionable.

A wide range of tools support orchestration, depending on technical needs and data maturity levels.

Effective orchestration improves data quality, speeds up decision-making, and reinforces compliance.

🔎 What does data orchestration involve, and why is it essential for a data-driven organisation? Discover the core principles, benefits, and how to implement it successfully. 🚀

What is data orchestration?

Data orchestration refers to all the processes involved in moving, transforming, and organising data within a business.

Its purpose is to automate repetitive tasks and coordinate flows across various sources and tools in the data ecosystem.

As the name suggests, it acts like an orchestra conductor. It plans each step: collection, standardisation, transformation, and finally delivery to analysis or activation tools.

Its aim? To ensure the right data reaches the right place, at the right time, in the right format – overseeing the entire flow from end to end. It goes far beyond a simple point-to-point integration.

A broader concept

Orchestration is often confused with pipeline management, but it is a broader concept. It coordinates all flows to ensure quality, consistency, and availability at every stage of the data lifecycle, up to activation.

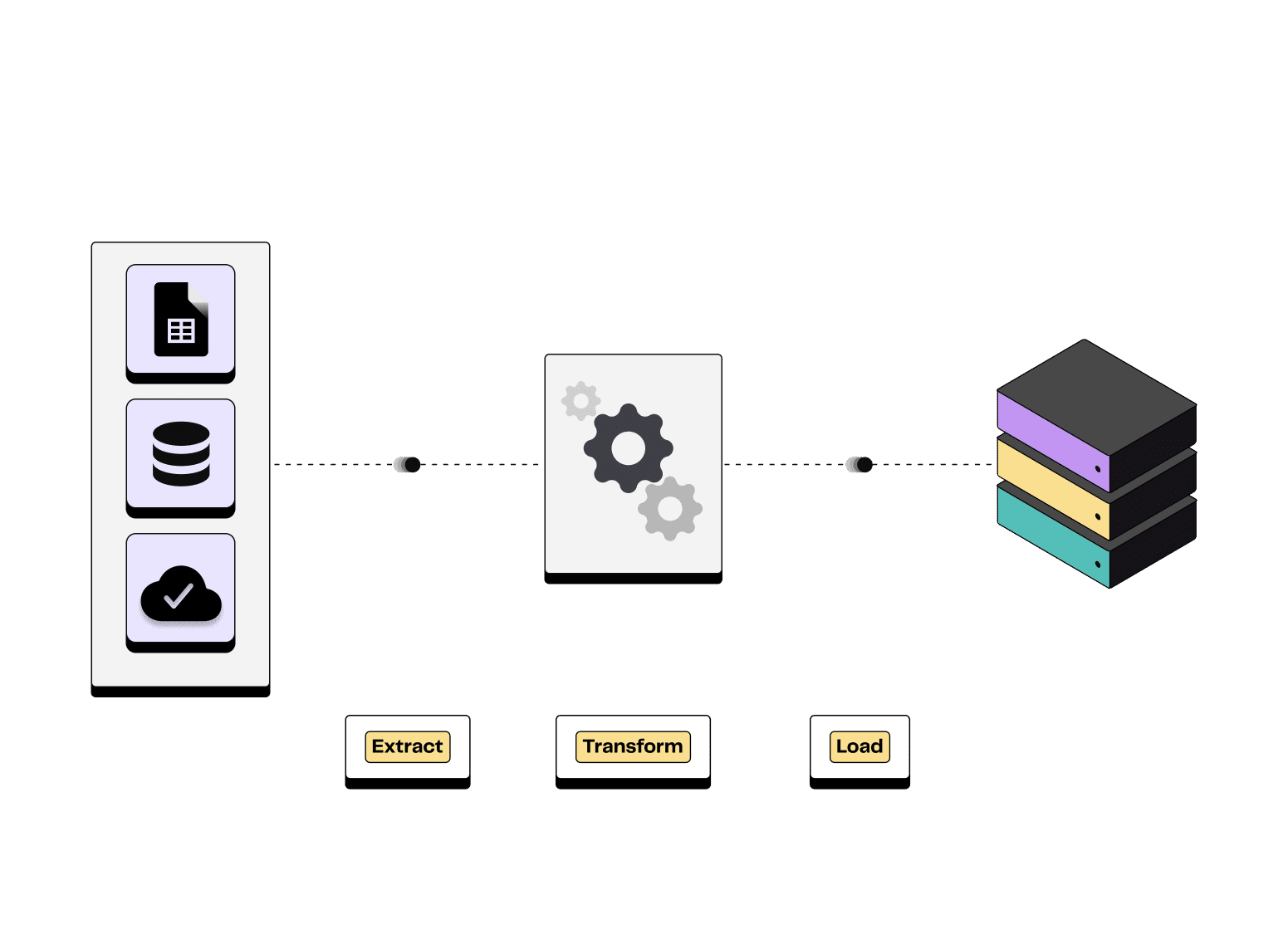

It also differs from ETL (Extract, Transform, Load), which extracts data, transforms it, and loads it into a data warehouse. ETL typically operates in batch mode, limiting reactivity and scalability.

Extract Transform Load process illustration

Orchestration sequences every step – from ingestion to activation. Let’s now explore how it works in practice, step by step.

How does data orchestration work?

Data orchestration relies on a logical sequence of automated tasks. It follows three main stages, from data collection to activation in business tools.

1️⃣ Data collection and organisation

Your data generally comes from various sources: CRM, social media, websites, offline systems, etc. The first goal is to break down silos and prepare a coherent foundation for analysis.

This means centralising these flows in a single cloud-based environment, such as Google BigQuery or Snowflake. For that data to be usable, however, it must also be standardised, which leads us to the next stage.

2️⃣ Transformation and standardisation

Once collected, the data must be cleaned, formatted, and possibly enriched.

💡 For example, the same date might appear as “01/03/2025” in one tool and “2025-03-01” in another.

This stage involves applying business rules and data quality processes (deduplication, normalisation, validation, etc.). The aim is to obtain reliable and consistent data that teams can readily use.
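The three quality steps named above (normalisation, validation, deduplication) can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the source names (`crm`, `web`), the per-source date formats and the record fields are hypothetical, the validation rule is deliberately crude, and "01/03/2025" is assumed to mean 1 March (day/month/year), since the string alone is ambiguous.

```python
from datetime import datetime

# Hypothetical per-source date formats; "01/03/2025" is assumed to be day/month/year.
SOURCE_DATE_FORMATS = {"crm": "%d/%m/%Y", "web": "%Y-%m-%d"}

def normalise_date(value: str, source: str) -> str:
    """Parse a date with its source's format and return it as ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value, SOURCE_DATE_FORMATS[source]).date().isoformat()

def standardise(records: list[dict]) -> list[dict]:
    """Normalise, validate and deduplicate raw records coming from several sources."""
    seen: set[tuple[str, str]] = set()
    clean = []
    for rec in records:
        email = rec["email"].strip().lower()      # normalisation
        if "@" not in email:                      # validation: drop obviously bad e-mails
            continue
        day = normalise_date(rec["date"], rec["source"])
        if (email, day) in seen:                  # deduplication on (e-mail, date)
            continue
        seen.add((email, day))
        clean.append({"email": email, "date": day})
    return clean

rows = [
    {"source": "crm", "email": "Ana@Example.com ", "date": "01/03/2025"},
    {"source": "web", "email": "ana@example.com", "date": "2025-03-01"},
    {"source": "web", "email": "unknown", "date": "2025-03-02"},
]
print(standardise(rows))  # [{'email': 'ana@example.com', 'date': '2025-03-01'}]
```

Once both dates are in the same format, the CRM and web records turn out to describe the same event, so only one is kept.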

3️⃣ Activation in business tools

The final step is to make the data available for use: it is automatically sent to visualisation tools or marketing platforms.

The activation phase allows for real-time campaign personalisation and informed decision-making.

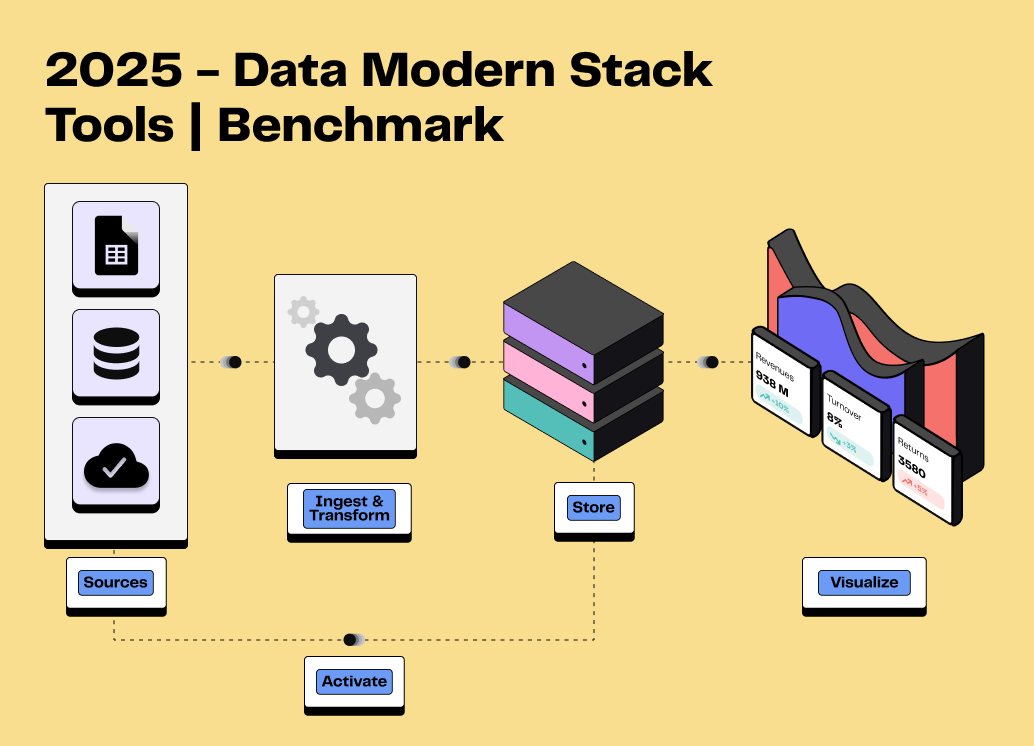

Data orchestration stages
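To make the three stages concrete, here is a minimal Python sketch in which plain functions stand in for each stage and hard-coded lists stand in for a CRM and a website. All names and data are hypothetical; a real orchestrator would read from and write to actual systems.

```python
# Hypothetical in-memory "sources" standing in for a CRM and a website.
CRM = [{"id": 1, "country": "fr"}, {"id": 2, "country": "DE "}]
WEB = [{"id": 3, "country": "Fr"}]

def collect() -> list[dict]:
    """Stage 1: gather records from every source into one place."""
    return CRM + WEB

def transform(records: list[dict]) -> list[dict]:
    """Stage 2: apply a business rule (trimmed, upper-case country codes)."""
    return [{**r, "country": r["country"].strip().upper()} for r in records]

def activate(records: list[dict]) -> dict[str, list[int]]:
    """Stage 3: build per-country audiences a marketing tool could consume."""
    audiences: dict[str, list[int]] = {}
    for r in records:
        audiences.setdefault(r["country"], []).append(r["id"])
    return audiences

def run_pipeline() -> dict[str, list[int]]:
    # The orchestration part: run the stages in order and pass each output on.
    return activate(transform(collect()))

print(run_pipeline())  # {'FR': [1, 3], 'DE': [2]}
```

Without the transformation stage, "fr", "Fr" and "DE " would produce three inconsistent segments instead of two.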

Orchestration, automation, observability: What’s the difference?

These three concepts are complementary but serve different roles.

| Concept | Definition & principle | Example |
|---|---|---|
| Automation | Performing a task without manual input. | Triggering a report every Monday at 8 a.m. |
| Orchestration | Coordinating multiple repetitive tasks within an automated workflow. | Extracting web order data, transforming it, loading it into a data warehouse, then sending it to an email tool for a personalised campaign. |
| Observability | Continuously monitoring and analysing data integrity and availability. | Detecting a pipeline failure using an alerting system. |

Orchestration vs Automation vs Observability

Observability focuses on outcomes (whether data and pipelines are healthy), while orchestration is concerned with executing tasks in the right order according to a defined process.
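The distinction can be illustrated with a toy runner, a sketch far simpler than Airflow, Dagster or Prefect. Each task is a single automated step; the runner orchestrates them in dependency order using Python's standard `graphlib.TopologicalSorter`, and the `alert` callback plays the role of a very basic observability hook. The function and task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def run_workflow(tasks, dependencies, max_retries=2, alert=print):
    """Run tasks in dependency order; retry failures and alert when a task gives up.

    tasks: task name -> zero-argument callable (each is one automated step).
    dependencies: task name -> set of task names that must complete first.
    Returns the names of the tasks that completed successfully.
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    completed = []
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(1, max_retries + 2):  # first try + max_retries retries
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:  # report every failure to the observability hook
                alert(f"{name} failed (attempt {attempt}): {exc}")
        else:  # every attempt failed: stop so downstream tasks never run on bad data
            alert(f"{name} gave up, stopping workflow")
            break
    return completed

steps = {name: (lambda: None) for name in ("extract", "transform", "load", "send_email")}
deps = {"transform": {"extract"}, "load": {"transform"}, "send_email": {"load"}}
print(run_workflow(steps, deps))  # ['extract', 'transform', 'load', 'send_email']
```

Stopping the whole run when a task gives up is one possible design choice; production orchestrators usually let you configure retries, timeouts and failure behaviour per task.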

What are the benefits of orchestration?

Data orchestration automates repetitive tasks and streamlines exchanges between tools, ensuring data is accurate and up to date. The benefits are many:

Time-saving and workflow optimisation: Manual processes are time-consuming and prone to errors. Orchestration automates key stages: collection, transformation, loading, activation. The result: smoother, better-structured processes.

Data quality and reliability: Poorly formatted or outdated data can skew analysis. Orchestration applies validation rules, normalisation, and quality control, which helps ensure clean, usable data.

Breaking down silos and centralisation: Data is often scattered across systems (CRM, websites, ads...). Orchestration gathers it in a data warehouse, which becomes your single source of truth.

Compliance and security: Orchestration supports GDPR compliance and other regulations. It ensures data traceability, applies governance rules, manages access, and secures system communication.
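As an illustration of governance rules inside a workflow, the sketch below pseudonymises an e-mail address with a keyed hash (HMAC-SHA-256) before export and records the step in an audit log for traceability. The key, field names and log format are assumptions. Note that pseudonymised data still counts as personal data under the GDPR, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac

# Assumption: in production the key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymise_email(email: str) -> str:
    """Return a keyed SHA-256 hash (HMAC) of the trimmed, lower-cased e-mail address."""
    normalised = email.strip().lower()
    return hmac.new(SECRET_KEY, normalised.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_for_export(record: dict, audit_log: list) -> dict:
    """Swap the raw e-mail for its pseudonym and record the step for traceability."""
    exported = {key: value for key, value in record.items() if key != "email"}
    exported["email_hash"] = pseudonymise_email(record["email"])
    audit_log.append({"record_id": record["id"], "action": "pseudonymise_email"})
    return exported

log: list = []
row = prepare_for_export({"id": 7, "email": " Ana@Example.com", "plan": "pro"}, log)
print(sorted(row))  # ['email_hash', 'id', 'plan']
```

Normalising the address before hashing means the same person produces the same pseudonym across tools, so records can still be joined without exposing the raw e-mail.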

How to build an effective strategy

Orchestration requires careful planning, the right tools, and precise execution. Here are the key steps to success.

Define needs and objectives

Start by clarifying what you want to achieve based on your company’s goals:

What type of data needs orchestrating? From which sources?

Which teams will use it (marketing, product, finance...)?

Which actions should be automated or simplified?

This step helps define the functional scope and prioritise value: time-saving, reliability, activation.

Choose the right tools for your infrastructure

There’s a wide range of tools – open-source, cloud, no-code/low-code. Here are some of the main options on the market.

Vendor | Features | Details |

|---|---|---|

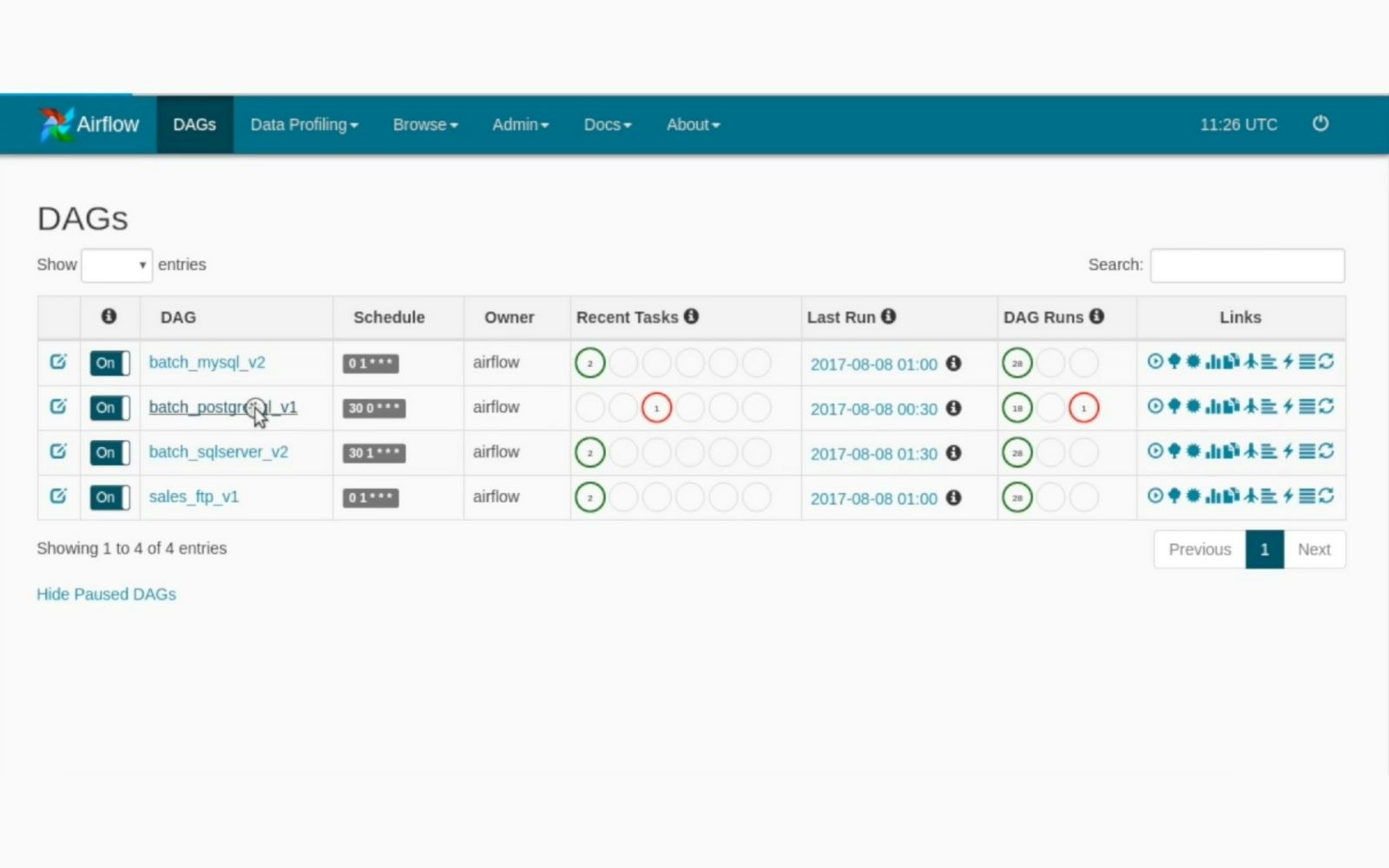

Apache Airflow | Popular open-source tool for orchestrating complex workflows via Python. | Highly flexible but requires strong technical skills. |

Dagster | Dagster is a modern open-source orchestrator for building, testing and monitoring pipelines. | It stands out for its data asset-oriented approach and strong focus on observability, while remaining developer-oriented (Python). |

Prefect | A modern alternative to Airflow; supports dynamic workflows and better error handling. | It can be used in open-source or in the cloud for greater simplicity. |

Rivery | Cloud-based, ready-to-use solution with integrated orchestration and automation. | Rivery started out as a data integration tool, but has gradually added orchestration and observability features. |

The main data orchestration tools

Apache Airflow (source: Ryan Bark)

👉 The best tool depends on your data maturity, internal resources, and technical constraints.

Test and implement automated workflows

Once your tool is selected, define the sequence of tasks to orchestrate:

Data collection from various sources (CRM, web, analytics, etc.)

Transformation and enrichment using business logic

Activation in tools (emailing, BI, ads platforms...)

Workflows can then be triggered automatically by specific events.
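Event-based triggering can be sketched as a registry that maps event names to workflows. The event name `crm.contact_updated`, the `on` decorator and the workflow below are hypothetical; orchestration tools provide their own trigger mechanisms (for example sensors, webhooks or schedules, depending on the tool).

```python
from collections import defaultdict
from typing import Callable

Workflow = Callable[[dict], None]

# Hypothetical registry: event name -> workflows to launch when that event occurs.
_subscriptions: dict[str, list[Workflow]] = defaultdict(list)

def on(event: str):
    """Decorator subscribing a workflow function to an event name."""
    def register(workflow: Workflow) -> Workflow:
        _subscriptions[event].append(workflow)
        return workflow
    return register

def emit(event: str, payload: dict) -> int:
    """Run every workflow subscribed to `event`; return how many were triggered."""
    workflows = _subscriptions.get(event, [])
    for workflow in workflows:
        workflow(payload)
    return len(workflows)

synced: list[int] = []

@on("crm.contact_updated")
def sync_contact_to_email_tool(payload: dict) -> None:
    synced.append(payload["contact_id"])  # stand-in for a call to an e-mail tool's API

emit("crm.contact_updated", {"contact_id": 42})
print(synced)  # [42]
```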

💡 The DinMo customer data platform goes further by supporting real-time marketing activations and data synchronisation.

Monitor and optimise continuously

Effective orchestration relies on regular monitoring of workflows:

Detecting errors or delays

Verifying the quality of the processed data

Optimising processing times and costs
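These three checks can be expressed as simple rules evaluated after each run. This is a sketch with hypothetical thresholds and run-summary fields; in practice the metrics would come from your orchestrator's run history or a dedicated observability tool.

```python
from datetime import datetime, timedelta, timezone

def check_run(run: dict, now: datetime, max_delay: timedelta,
              max_error_rate: float, max_duration_s: float) -> list[str]:
    """Return alert messages for one workflow run; an empty list means healthy.

    `run` is a hypothetical summary with 'finished_at', 'rows_in',
    'rows_rejected' and 'duration_s' keys.
    """
    alerts = []
    if now - run["finished_at"] > max_delay:                  # errors or delays
        alerts.append("delay: last run is older than the allowed window")
    error_rate = run["rows_rejected"] / max(run["rows_in"], 1)
    if error_rate > max_error_rate:                            # data quality
        alerts.append(f"quality: {error_rate:.1%} of rows rejected")
    if run["duration_s"] > max_duration_s:                     # processing time and cost
        alerts.append(f"performance: run took {run['duration_s']} s")
    return alerts

now = datetime(2025, 4, 17, 9, 0, tzinfo=timezone.utc)
run = {"finished_at": now - timedelta(hours=30), "rows_in": 1000,
       "rows_rejected": 50, "duration_s": 540}
print(check_run(run, now, timedelta(hours=24), 0.02, 600))
# ['delay: last run is older than the allowed window', 'quality: 5.0% of rows rejected']
```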

Challenges and best practices

Implementing data orchestration can face several obstacles:

Integration issues between tools or data formats: Complex environments and heterogeneous systems can make data integration challenging. Each tool in your stack may use different formats (JSON, CSV, proprietary APIs, etc.).

Unanticipated implementation costs: Rolling out an orchestration tool involves time, human resources, and sometimes dedicated cloud infrastructure (Google Cloud, Azure, AWS). Without a clear framework, budgets can quickly spiral out of control.

Scalability issues without the right architecture: As data volumes grow, workflows must evolve accordingly. An architecture that lacks scalability may slow down processing and overload your data pipelines.

Lack of internal expertise or coordination: Once workflows are in place, they need to be monitored, maintained, and optimised, which requires dedicated skills. Coordination between teams is also key to a successful orchestration project.
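For the first challenge, heterogeneous formats, a common approach is to write one small adapter per source that maps its output onto a shared schema. The sketch below assumes a CSV export with `Email`/`Amount` columns and a JSON payload with nested `customer.email` and `total_cents` fields; both shapes are invented for illustration. Amounts are converted to integer cents with `Decimal` to avoid floating-point rounding.

```python
import csv
import io
import json
from decimal import Decimal

def from_csv(text: str) -> list[dict]:
    """Map a CSV export (hypothetical 'Email'/'Amount' columns) to the common schema."""
    return [
        {"email": row["Email"], "amount_cents": int(Decimal(row["Amount"]) * 100)}
        for row in csv.DictReader(io.StringIO(text))
    ]

def from_json(text: str) -> list[dict]:
    """Map a JSON API payload (hypothetical nested shape) to the same schema."""
    payload = json.loads(text)
    return [
        {"email": order["customer"]["email"], "amount_cents": order["total_cents"]}
        for order in payload["orders"]
    ]

csv_export = "Email,Amount\nana@example.com,19.90\n"
api_response = '{"orders": [{"customer": {"email": "bo@example.com"}, "total_cents": 4500}]}'
records = from_csv(csv_export) + from_json(api_response)
print(records)
# [{'email': 'ana@example.com', 'amount_cents': 1990}, {'email': 'bo@example.com', 'amount_cents': 4500}]
```

Adding a new source then means writing one more adapter, while every downstream step keeps working on the same schema.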

Best practices to avoid pitfalls:

Document every workflow: Keep a clear record of each orchestration step (sources, transformations, destinations). This simplifies maintenance and knowledge sharing.

Prioritise high-value use cases: Start with workflows that have a direct business impact: automated reporting, CRM updates, marketing sync.

Automate tasks step by step: A modular approach lets you test the robustness of each process before it reaches production, which reduces errors.

Choose tools compatible with your current stack: Opt for orchestration tools that integrate easily with your infrastructure (cloud, data warehouse, BI tools) to minimise technical friction.

Involve business and data teams from the start: Engaging end-users early on helps define needs accurately, avoid unnecessary workflows, and encourage adoption of the deployed solutions.

Conclusion

Data orchestration enables organisations to structure, automate, and secure data flows between tools. It plays a central role in a modern data stack.

By streamlining the collection, transformation, and activation of data, it improves the quality of analysis, team responsiveness, and campaign performance.

💡 Make the most of the data stored in your cloud data warehouse with DinMo. Segment and activate your customer data across your marketing tools, with zero technical complexity.

FAQ

How can you measure the ROI of a data orchestration project?


The return on investment (ROI) of a data orchestration strategy can be assessed on several levels. It’s useful to compare metrics before and after orchestration is implemented. You can calculate:

- The time saved through workflow automation

- The reduction in processing errors

- Performance improvements in data activation

To go further, you can track indicators like data availability rate and compare them with the costs associated with the project.

Overall, effective orchestration helps businesses make better use of their data and generate greater value.
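A simple way to turn the before/after comparison into a single figure is the classic ROI ratio, (gains - costs) / costs. The sketch below values time saved at an hourly cost and adds avoided error costs; the function, its parameters and the figures in the example are purely illustrative, not benchmarks.

```python
def orchestration_roi(hours_saved_per_month: float, hourly_cost: float,
                      error_costs_avoided_per_month: float,
                      project_cost: float, months: int = 12) -> float:
    """ROI over `months`: (gains - costs) / costs, with gains = time saved + errors avoided."""
    monthly_gain = hours_saved_per_month * hourly_cost + error_costs_avoided_per_month
    gains = months * monthly_gain
    return (gains - project_cost) / project_cost

# Illustrative inputs only: 40 h/month saved at 50/h, 500/month of avoided error costs,
# and a 20,000 project cost over 12 months.
print(f"{orchestration_roi(40, 50, 500, 20_000):.0%}")  # 50%
```

With these inputs, gains are 12 × (40 × 50 + 500) = 30,000, so ROI = (30,000 - 20,000) / 20,000 = 50%.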

What are the concrete use cases for data orchestration in marketing?


Data orchestration connects different marketing tools and automates data flows. It is extremely useful for campaign personalisation, lead scoring and audience segmentation.

For example, data from the website is centralised in a data warehouse. Once it’s ready to use, it can be transferred to a CRM or emailing platform to launch a targeted campaign.
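The segmentation step in this example can be sketched as a simple rule applied to centralised website data. The field names, thresholds (3 visits in 30 days) and data are hypothetical, and sending the resulting list to a CRM or emailing platform is left out, since that depends on each tool's API.

```python
from datetime import date, timedelta

def build_audience(visits: list[dict], today: date,
                   min_visits: int = 3, window_days: int = 30) -> list[str]:
    """Return e-mails of visitors with at least `min_visits` visits in the last `window_days` days."""
    cutoff = today - timedelta(days=window_days)
    counts: dict[str, int] = {}
    for visit in visits:
        if visit["day"] >= cutoff:  # ignore visits outside the window
            counts[visit["email"]] = counts.get(visit["email"], 0) + 1
    return sorted(email for email, n in counts.items() if n >= min_visits)

visits = (
    [{"email": "ana@example.com", "day": date(2025, 4, d)} for d in (10, 12, 15)]
    + [{"email": "bo@example.com", "day": date(2025, 4, 15)},
       {"email": "bo@example.com", "day": date(2025, 1, 2)}]
)
print(build_audience(visits, today=date(2025, 4, 17)))  # ['ana@example.com']
```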

Orchestration automatically combines data from multiple channels and makes it available for analysis. This simplifies performance tracking and supports better decision-making.

Thanks to orchestration, marketers can rely on up-to-date, reliable data available in real time. A Customer Data Platform (CDP) supports this approach by enabling more efficient and consistent actions across the entire customer journey.

How can data orchestration workflows be secured?


Securing workflows relies on applying best practices. Of course, it’s essential to control data access and manage permissions based on user roles.

It is also important to encrypt data, both at rest and in transit. An orchestration solution should offer secure authentication protocols, audit logs and monitoring features.

It's also recommended to test pipelines in a sandbox environment before deployment. A secure, compliant cloud architecture (GDPR, CCPA, etc.) makes it easier to manage orchestration workflows safely and efficiently.
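Role-based access control combined with an audit log can be sketched as follows. The roles, actions and log format are assumptions for illustration; in practice, permissions are usually managed through the identity and access management features of your cloud provider or orchestration tool rather than in application code.

```python
class AccessDenied(Exception):
    """Raised when a role is not allowed to perform an action."""

# Hypothetical role model: actions each role may perform on workflows.
PERMISSIONS = {
    "viewer": {"read"},
    "analyst": {"read", "run"},
    "admin": {"read", "run", "edit", "deploy"},
}

def authorise(user: dict, action: str, audit_log: list) -> None:
    """Log the access decision, then raise AccessDenied if the role lacks the permission."""
    allowed = action in PERMISSIONS.get(user["role"], set())  # unknown roles get nothing
    audit_log.append({"user": user["name"], "action": action, "allowed": allowed})
    if not allowed:
        raise AccessDenied(f"{user['name']} ({user['role']}) may not {action}")

log: list = []
authorise({"name": "ana", "role": "analyst"}, "run", log)  # permitted
try:
    authorise({"name": "ana", "role": "analyst"}, "deploy", log)
except AccessDenied as exc:
    print(exc)  # ana (analyst) may not deploy
```

Logging denied attempts as well as granted ones is what makes the audit trail useful when investigating incidents.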